1 High-level Overview #

This chapter describes the components of an OBS installation and the typical administration tasks for an OBS administrator.

This chapter is not intended to describe special installation hints for a certain OBS version. Refer to the OBS download page for that.

1.1 OBS Components #

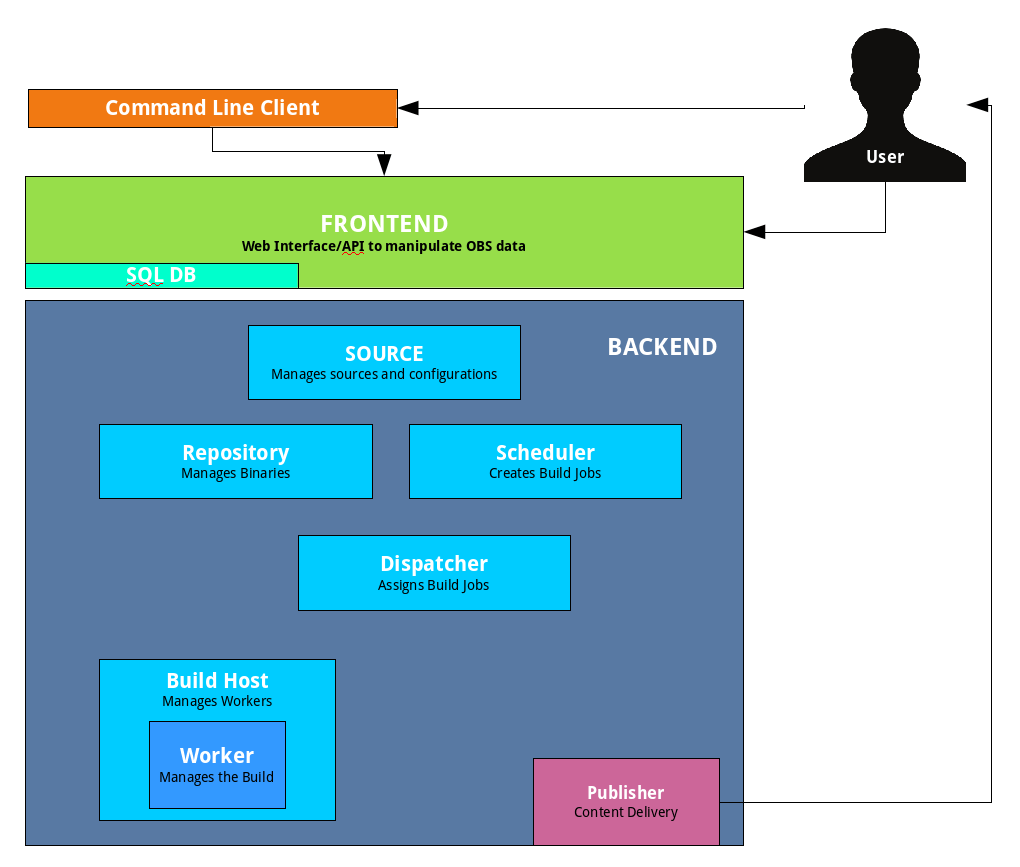

The OBS is not a monolithic server: it consists of multiple daemons that perform different tasks.

1.1.1 Front-end #

The OBS Front-end is a Ruby on Rails application that manages the access and manipulation of OBS data. It provides a web user interface and an application programming interface to do so. Both can be used to create, read, update and delete users, projects, packages, requests and other objects. It also implements additional sub-systems like authentication, search, and email notifications.

1.1.2 Back-end #

The OBS Back-end is a collection of Perl applications that manage the source files and build jobs of the OBS.

1.1.2.1 Source Server #

Maintains the source repository and project/package configurations. It provides an HTTP interface, which is the only interface to the front-end. It may forward requests to other back-end services. Each OBS installation has exactly one Source Server. It maintains the "sources", "trees" and "projects" directories.

1.1.2.2 Repository Server #

A repository server provides access to the binaries via an HTTP interface. It is used by the front-end via the source server only. Workers use the server to register, request the binaries needed for build jobs, and store the results. Notifications for schedulers are also created by repository servers. Each OBS installation has at least one repository server. A larger installation using partitioning has one on each partition.

1.1.2.3 Scheduler #

A scheduler calculates the need for build jobs. It detects changes in sources, project configurations or in binaries used in the build environment. It is responsible for starting jobs in the right order and integrating the built binary packages. Each OBS installation has one scheduler per available architecture and partition. It maintains the "build" directory.

1.1.2.4 Dispatcher #

The dispatcher takes a job (created by the scheduler) and assigns it to a free worker. It also checks possible build constraints to verify that the worker qualifies for the job. It only notifies a worker about a job; the worker itself downloads the required resources. Each OBS installation has one dispatcher per partition (one of which is the master dispatcher).

1.1.2.5 Publisher #

The publisher processes "publish" events from the scheduler for finished repositories. It merges the build result of all architectures into a defined directory structure, creates the required metadata, and optionally syncs it to a download server. It maintains the "repos" directory on the back-end. Each OBS installation has one publisher per partition.

1.1.2.6 Source Publisher #

The source publisher processes "sourcepublish" events from the publisher for published binary repositories. It needs to run on the same instance as the source server. It can be used to publish a filesystem structure providing all sources of published binaries. In case of images or containers this also includes the sources of used binary packages.

1.1.2.7 Worker #

The workers register with the repository servers. They receive build jobs from the dispatcher. Afterwards they download the sources from the source server and the required binaries from the repository server(s). They build the package using the build script and send the results back to the repository server. A worker can run on the same host as other services, but most OBS installations have dedicated hardware for the workers.

1.1.2.8 Signer #

The signer handles signing events and calls an external tool to execute the signing. Each OBS installation usually has one signer per partition and one on the source server installation.

1.1.2.9 Warden #

The warden monitors the workers and detects crashed or hanging workers. Their build jobs will be canceled and restarted on another host. Each OBS installation can have one Warden service running on each partition.

1.1.2.10 Download on Demand Updater (dodup) #

The download on demand updater monitors all external repositories which are defined as "download on demand" resources. It polls for changes in the metadata and re-downloads the metadata as needed. The scheduler will be notified to recalculate the build jobs depending on these repositories afterwards. Each OBS installation can have one dodup service running on each partition.

1.1.2.11 Delta Store #

The delta store daemon maintains the deltas in the source storage. Multiple obscpio archives can be stored in one deltastore to avoid duplication on disk. This service calculates the delta and maintains the delta store. Each OBS installation can have one delta store process running next to the source server.

1.1.3 Command Line Client #

The Open Build Service Commander (osc) is a Python application with a Subversion-style command-line interface. It can be used to manipulate or query data from the OBS through its application programming interface.

1.1.4 Content Delivery Server #

The OBS is agnostic about how you serve build results to your users. It will just write repositories to disk. But many people sync these repositories to some content delivery system like MirrorBrain.

1.1.5 Requirements #

We highly recommend, and in fact only test, installations on the SUSE Linux Enterprise Server and openSUSE operating systems. However, there are also installations on Debian and Fedora systems.

The OBS also needs an SQL database (MySQL or MariaDB) for persistent data and a memcache daemon for volatile data.

1.2 OBS Appliances #

This section gives an overview of the different OBS appliances and how to deploy them for production use.

1.2.1 Server Appliance #

The OBS server appliance contains a recent openSUSE distribution with a pre-installed and pre-configured OBS front-end, back-end and worker. The operating system on this appliance adapts to the hardware on first boot and defaults to automatic IP and DNS configuration via DHCP.

1.2.2 Worker Appliance #

The OBS worker appliance includes a recent openSUSE distribution and the OBS worker component. The operating system on this appliance adapts to the hardware on first boot, defaults to automatic IP and DNS configuration via DHCP and OBS server discovery via SLP.

1.2.3 Image Types #

There are different types of OBS appliance images.

| File Name Suffix | Appliance for |
|---|---|
| .vdi | VirtualBox |
| .vmdk | VMware Workstation and Player. Note: Our VirtualBox images are usually better tested. |
| .qcow2 | QEMU/KVM |
| .raw | Direct writing to a block device |
| .tgz | Deploying via PXE from a central server |

1.2.4 Deployment #

To help you deploy the OBS server appliance to a hard disk there is a basic installer that you can boot from a USB stick. The installer can be found on the OBS Download page.

The image can be written to a USB stick to boot from it:

xzcat obs-server-install.x86_64.raw.xz > /dev/sdX

/dev/sdX is the main device of your USB stick. Do NOT put it into a partition like /dev/sda1. If you use the wrong device, you will destroy all data on it!
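The warning above can be turned into a small guard. The following is a minimal sketch, not part of the OBS tooling: looks_like_partition and write_image are hypothetical helper names, and the name patterns only cover common Linux device naming schemes (sdX, NVMe, MMC). The check inspects the device name only, so it complements careful verification of the target device rather than replacing it.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the device name looks like a partition.
# It only looks at the name and never touches the device itself.
looks_like_partition() {
    case "$1" in
        /dev/sd[a-z]*[0-9])        return 0 ;;  # e.g. /dev/sda1
        /dev/nvme*n[0-9]*p[0-9]*)  return 0 ;;  # e.g. /dev/nvme0n1p1
        /dev/mmcblk[0-9]*p[0-9]*)  return 0 ;;  # e.g. /dev/mmcblk0p1
        *)                         return 1 ;;
    esac
}

# Hypothetical wrapper around the xzcat command from the text.
# Usage: write_image obs-server-install.x86_64.raw.xz /dev/sdX
write_image() {
    image=$1
    target=$2
    if looks_like_partition "$target"; then
        echo "refusing: $target looks like a partition" >&2
        return 1
    fi
    if [ ! -b "$target" ]; then
        echo "refusing: $target is not a block device" >&2
        return 1
    fi
    # Same command as in the text, followed by a sync to flush buffers.
    xzcat "$image" > "$target" && sync
}
```

Nothing is written when the script is merely sourced; the image is only written when write_image is called with a whole-disk block device.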

How to deploy the other image types depends heavily on your virtualization setup and is out of scope for this guide.

1.2.5 Separating Data from the System #

For production use, separate the OBS data from the operating system of the appliance so that you can re-deploy the appliance without touching your OBS data. This can be achieved by creating an LVM volume group with the name "OBS". This volume group should be as large as possible because the OBS back-end uses it for data storage and the OBS workers use it for root/swap/cache file systems. To create the volume group, prepare a partition of type "8e" (Linux LVM) and run:

pvcreate /dev/sdX1
vgcreate "OBS" /dev/sdX1

Additionally, if the OBS volume group contains a logical volume named “server”, it will be used as the data partition for the server.

lvcreate "OBS" -n "server"
mkfs.xfs /dev/OBS/server
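The LVM steps in this section can be collected into one reviewable plan. This is a sketch under stated assumptions: obs_lvm_plan is a hypothetical helper that only prints commands, and the 50G default size is an arbitrary example value. The size option is an addition to the commands in the text, since lvcreate normally expects a size (-L) or extent count (-l) for a regular logical volume. Leave enough room in the volume group for the workers' root/swap/cache file systems.

```shell
#!/bin/sh
# Hypothetical helper: prints the LVM commands for a partition instead of
# running them, so the plan can be reviewed before anything is changed.
#   $1 = partition of type 8e, e.g. /dev/sdX1
#   $2 = size of the "server" volume (assumed example default: 50G)
obs_lvm_plan() {
    part=$1
    size=${2:-50G}
    printf '%s\n' \
        "pvcreate $part" \
        "vgcreate OBS $part" \
        "lvcreate -L $size -n server OBS" \
        "mkfs.xfs /dev/OBS/server"
}
```

Calling obs_lvm_plan /dev/sdX1 200G prints four commands that can be checked and then run as root one by one.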

1.2.6 Updating the Appliance #

All images come pre-configured with the right set of repositories and can be updated via the system tools YaST or zypper at any time. Another way to update is to re-deploy the entire image.

If you re-deploy the entire image, keep in mind that your data directory (/srv/obs) must be on separate storage (LVM volume, partition, etc.); otherwise it will be deleted!

1.3 Back-end Administration #

1.3.1 Services #

You can control the different back-end components via systemctl. You can enable or disable a service at boot time and start, stop, or restart it in a running system. For more information, see the systemctl man page. For example, to restart the repository server, use:

systemctl restart obsrepserver.service

| Component | Service Name |
|---|---|
| Source Server | obssrcserver.service |
| Repository Server | obsrepserver.service |
| Scheduler | obsscheduler.service |
| Dispatcher | obsdispatcher.service |
| Publisher | obspublisher.service |
| Source Publisher | obssourcepublish.service |
| Worker | obsworker.service |
| Source Services | obsservice.service |
| Download On Demand Repository Meta Data Updates | obsdodup.service |
| Delta Storage | obsdeltastore.service |
| Signer | obssigner.service |
| Warden | obswarden.service |
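To get a quick overview of all back-end services at once, the unit names from the table can be looped over. This is a minimal sketch: obs_backend_units and obs_backend_status are hypothetical helper names, and which units are actually installed depends on your setup, so units that are not present are simply reported with whatever state systemctl prints for them.

```shell
#!/bin/sh
# Hypothetical helper: prints the unit names listed in the table above.
obs_backend_units() {
    for name in srcserver repserver scheduler dispatcher publisher \
                sourcepublish worker service dodup deltastore signer warden; do
        echo "obs${name}.service"
    done
}

# Hypothetical helper: prints one line per unit with its current state.
obs_backend_status() {
    for unit in $(obs_backend_units); do
        # "systemctl is-active" prints a state such as active or inactive.
        state=$(systemctl is-active "$unit" 2>/dev/null)
        printf '%-28s %s\n' "$unit" "${state:-unknown}"
    done
}
```

Running obs_backend_status on a back-end host prints a two-column list of units and states.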

1.3.2 Advanced Setups #

It makes sense to run some of the different components of the OBS back-end on isolated hosts.

1.3.2.1 Distributed Workers #

OBS workers can be very resource hungry. It all depends on the software that is being built, and how. Single builds deep down in the dependency chain can also trigger a sea of jobs. It makes sense to split off workers from all the other services so they do not have to fight for the same operating system/hardware resources. Here is an example of how to set up a remote OBS worker.

1. Install the worker package called obs-worker

2. Configure the OBS repository server address in the file /etc/sysconfig/obs-server. Change the variable OBS_REPO_SERVERS to the host name of the machine on which the repository server is running: OBS_REPO_SERVERS="myreposerver.example:5252"

3. Start the worker
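The configuration step can be scripted. The following is a sketch under stated assumptions: set_repo_servers is a hypothetical helper, not an OBS tool, and it assumes the value contains no "|" or "&" characters, which would confuse the sed expression. The install and start commands in the comments assume an openSUSE or SLES host with zypper and systemd.

```shell
#!/bin/sh
# Hypothetical helper: sets OBS_REPO_SERVERS in a sysconfig-style file.
#   $1 = configuration file, $2 = "host:port" of the repository server
set_repo_servers() {
    conf=$1
    servers=$2
    tmp=$(mktemp) || return 1
    if grep -q '^OBS_REPO_SERVERS=' "$conf"; then
        # Replace the existing assignment, keep all other lines unchanged.
        sed "s|^OBS_REPO_SERVERS=.*|OBS_REPO_SERVERS=\"$servers\"|" "$conf" > "$tmp"
    else
        # No assignment yet: append one.
        cat "$conf" > "$tmp"
        printf 'OBS_REPO_SERVERS="%s"\n' "$servers" >> "$tmp"
    fi
    # Write back in place so ownership and permissions of the file are kept.
    cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# The three steps from the text, run as root on the worker host:
#   zypper install obs-worker
#   set_repo_servers /etc/sysconfig/obs-server "myreposerver.example:5252"
#   systemctl enable --now obsworker.service
```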

1.4 Front-end Administration #

The Ruby on Rails application is run through the Apache web server with mod_passenger. You can control it via systemctl:

systemctl {start, stop, restart} apache2

1.4.1 Delayed Jobs #

Another component of the OBS front-end is the delayed jobs service, which asynchronously executes longer tasks in the background. You can also control this service via systemctl:

systemctl {start, stop, restart} obsapidelayed

1.4.2 Full Text Search #

The full-text search for packages and projects is handled by Thinking Sphinx. The delayed job daemon will take care of starting this service. To control it after boot, use the rake tasks it provides:

rake ts:{start, stop, rebuild, index}